Introduction

Artificial Intelligence (AI) and Machine Learning (ML) have transformed numerous industries, from healthcare and finance to transportation and entertainment, and they promise substantial gains in efficiency, productivity, and innovation. Their rapid growth, however, brings ethical and regulatory challenges that cannot be ignored, ranging from bias in algorithms and the potential for job displacement to concerns over privacy.

Simultaneously, governments and regulatory bodies worldwide are grappling with how to effectively regulate AI and ML while fostering innovation. The challenge lies in balancing the benefits of these technologies with the need to protect individuals’ rights and ensure fairness. This article explores the ethical dilemmas surrounding AI and ML, the role of regulation, and potential pathways forward to create responsible and accountable AI systems.

1. Understanding AI and ML: A Brief Overview

Before diving into the ethical and regulatory challenges, it’s important to understand the basic concepts of AI and ML.

- Artificial Intelligence (AI) refers to computer systems that perform tasks normally associated with human intelligence, such as perception, reasoning, language understanding, and decision-making. AI systems are commonly grouped into two broad categories:

  - Narrow AI: AI designed to handle specific tasks, such as facial recognition, natural language processing, and recommendation systems.

  - General AI: AI with the ability to perform any intellectual task that a human can do. This type of AI remains largely theoretical at present.

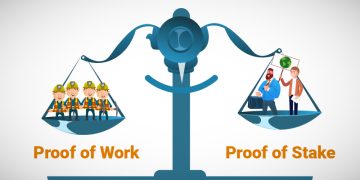

- Machine Learning (ML) is a subset of AI that enables machines to learn from data without being explicitly programmed for each task. ML models generally improve with exposure to more relevant data, and ML approaches are commonly grouped into three paradigms:

  - Supervised Learning: The model is trained on labeled examples (inputs paired with known outputs) and learns to predict outputs for new inputs.

  - Unsupervised Learning: The model finds patterns or groupings in data without predefined labels.

  - Reinforcement Learning: An agent learns by trial and error, receiving rewards or penalties for the actions it takes.

These technologies are foundational to innovations in robotics, data analytics, autonomous vehicles, healthcare, and beyond.
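As a rough illustration of the three learning paradigms listed above, the following Python sketch uses toy, made-up data: a least-squares line fit stands in for supervised learning, two-cluster k-means for unsupervised learning, and an epsilon-greedy multi-armed bandit for reinforcement learning. The function names and parameters are hypothetical choices for this example; real systems would rely on established libraries and far larger datasets.

```python
import random


# Supervised learning: every training example has a known label (y), and the
# model learns a mapping from x to y. Here: ordinary least squares for y = w*x + b.
def fit_line(xs, ys):
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    w = cov / var
    return w, mean_y - w * mean_x


# Unsupervised learning: no labels, only values. Here: 1-D k-means with k = 2,
# which splits the values into a low and a high cluster.
# Assumes the values are not all identical.
def two_means(values, iterations=10):
    low, high = min(values), max(values)  # start the centroids at the extremes
    for _ in range(iterations):
        midpoint = (low + high) / 2  # in 1-D, nearest centroid = which side of the midpoint
        low_group = [v for v in values if v <= midpoint]
        high_group = [v for v in values if v > midpoint]
        low = sum(low_group) / len(low_group)
        high = sum(high_group) / len(high_group)
    return low, high


# Reinforcement learning: the agent is never told the right answer; it tries
# actions, observes rewards, and keeps a running average reward per action.
# Here: an epsilon-greedy agent choosing between actions with different
# (hidden) probabilities of paying a reward of 1.
def epsilon_greedy_bandit(reward_probs, steps=2000, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    estimates = [0.0] * len(reward_probs)
    counts = [0] * len(reward_probs)
    for _ in range(steps):
        if rng.random() < epsilon:
            action = rng.randrange(len(reward_probs))  # explore at random
        else:
            action = max(range(len(estimates)), key=estimates.__getitem__)  # exploit
        reward = 1.0 if rng.random() < reward_probs[action] else 0.0
        counts[action] += 1
        estimates[action] += (reward - estimates[action]) / counts[action]  # running mean
    return estimates


print(fit_line([1, 2, 3, 4], [3, 5, 7, 9]))  # (2.0, 1.0)
print(two_means([1.0, 1.2, 0.8, 9.0, 9.5, 8.5]))
print(epsilon_greedy_bandit([0.2, 0.8]))  # the second action is expected to be valued higher
```

In the supervised case every labeled point lies on y = 2x + 1, so the fit recovers a slope of 2 and an intercept of 1; the unsupervised case finds cluster centres near 1 and 9 without ever being told which group a value belongs to.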

2. Ethical Challenges in AI and Machine Learning

As AI and ML technologies are integrated into various aspects of our daily lives, several ethical issues have arisen. Some of the most pressing concerns include:

a. Algorithmic Bias and Discrimination

One of the most significant ethical challenges in AI and ML is the potential for algorithmic bias. Machine learning algorithms learn patterns from data, and if the data used to train these systems is biased, the algorithm will likely replicate these biases. This can lead to discriminatory outcomes, especially in sensitive areas such as hiring, law enforcement, lending, and healthcare.

- Example: AI systems used in recruitment have been found to favor male candidates over female candidates due to biases present in historical hiring data. Similarly, predictive policing algorithms have been criticized for disproportionately targeting minority communities because of biased training data.

Key Point: Addressing algorithmic bias is critical to ensuring that AI and ML systems are fair and equitable. Transparency in data collection, diversity in training datasets, and continuous monitoring for bias are essential steps in combating this issue.
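A minimal sketch of what continuous bias monitoring can look like, assuming hiring decisions are recorded as (group, selected) pairs: it compares selection rates across groups and computes the ratio of the lowest rate to the highest. The 0.8 default threshold follows the four-fifths rule of thumb used in U.S. employment-selection guidelines; the function names and the sample data are hypothetical, and a ratio on its own neither proves nor rules out discrimination.

```python
from collections import defaultdict


def selection_rates(decisions):
    """Return the share of candidates selected in each group.

    decisions: iterable of (group, selected) pairs, where selected is a bool.
    """
    totals = defaultdict(int)
    chosen = defaultdict(int)
    for group, selected in decisions:
        totals[group] += 1
        chosen[group] += int(selected)
    return {group: chosen[group] / totals[group] for group in totals}


def disparate_impact_ratio(decisions):
    """Lowest group selection rate divided by the highest (1.0 means parity)."""
    rates = selection_rates(decisions)
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected, so there is no disparity in rates
    return min(rates.values()) / highest


def flags_adverse_impact(decisions, threshold=0.8):
    """True if the ratio falls below the (assumed) four-fifths threshold."""
    return disparate_impact_ratio(decisions) < threshold


# Hypothetical audit data: 20 of 50 group-A and 10 of 50 group-B applicants selected.
audit = [("A", i < 20) for i in range(50)] + [("B", i < 10) for i in range(50)]
print(selection_rates(audit))  # {'A': 0.4, 'B': 0.2}
print(disparate_impact_ratio(audit))  # 0.5
print(flags_adverse_impact(audit))  # True
```

Running such a check on every new batch of decisions, rather than once before launch, is what turns it into monitoring.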

b. Privacy Concerns

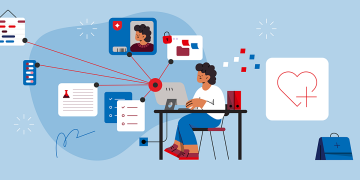

AI and ML technologies often rely on large datasets, many of which contain sensitive personal information. This raises significant privacy concerns, especially when personal data is collected without individuals’ informed consent or is used in ways they did not anticipate.

- Example: Facial recognition technology, used by law enforcement agencies and private companies, has sparked controversy over its potential for mass surveillance and the violation of individuals’ privacy rights.

Key Point: To mitigate privacy risks, AI and ML systems must comply with strict data protection laws, such as the EU’s General Data Protection Regulation (GDPR), which sets legally binding rules for how personal data is collected, processed, and stored.
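One safeguard the GDPR names explicitly is pseudonymisation. The sketch below assumes a secret key stored separately from the data and replaces chosen identifier fields with keyed SHA-256 hashes (HMAC), so records can still be linked to one another without exposing the raw identifier. The helper names, key, and sample record are hypothetical. Pseudonymised data generally still counts as personal data under the GDPR, because whoever holds the key can re-identify people by hashing candidate identifiers.

```python
import hashlib
import hmac


def pseudonymise(identifier: str, secret_key: bytes) -> str:
    """Replace an identifier with a keyed SHA-256 hash (hex string).

    The same identifier and key always give the same token, so datasets can
    still be joined on it, but the token alone does not reveal the identifier.
    """
    return hmac.new(secret_key, identifier.encode("utf-8"), hashlib.sha256).hexdigest()


def pseudonymise_record(record: dict, fields: set, secret_key: bytes) -> dict:
    """Return a copy of record with the listed identifier fields pseudonymised."""
    return {
        key: pseudonymise(str(value), secret_key) if key in fields else value
        for key, value in record.items()
    }


# Hypothetical key; in practice it would come from a key-management system.
KEY = b"replace-with-a-secret-key"
print(pseudonymise_record({"email": "jane@example.com", "age": 34}, {"email"}, KEY))
```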

c. Accountability and Transparency

As AI and ML systems become more complex, there is growing concern over their opacity, or lack of transparency. The “black-box” nature of many AI systems makes it difficult for individuals to understand how decisions are made. This lack of transparency is particularly problematic in high-stakes areas like healthcare, criminal justice, and finance, where decisions made by AI systems can have significant consequences for individuals’ lives.

- Example: If the AI system in an autonomous vehicle makes a decision that results in an accident, it can be difficult to determine who is responsible: the manufacturer, the developer, or the AI system itself.

Key Point: AI systems must be designed to be transparent and explainable, allowing individuals to understand how decisions are made. This is essential for ensuring accountability and fostering trust in AI technologies.
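For simple models, an explanation can be exact. The sketch below assumes a hypothetical linear credit-scoring model (the feature names, weights, and threshold are invented for illustration) and reports how much each input pushed the score up or down. For complex black-box models, attribution methods such as SHAP or LIME produce approximate breakdowns in a similar spirit.

```python
def explain_linear_decision(weights, bias, features, threshold=0.0):
    """Score a linear model and list each feature's contribution (weight * value).

    Contributions are sorted by absolute size so the most influential
    factors come first.
    """
    contributions = {name: weights[name] * value for name, value in features.items()}
    score = bias + sum(contributions.values())
    ranked = sorted(contributions.items(), key=lambda item: abs(item[1]), reverse=True)
    return {"score": score, "approved": score >= threshold, "contributions": ranked}


# Hypothetical model and applicant.
weights = {"income": 0.5, "debt_ratio": -2.0, "late_payments": -1.0}
applicant = {"income": 3.0, "debt_ratio": 0.4, "late_payments": 2}
result = explain_linear_decision(weights, bias=-0.5, features=applicant)
print(result["approved"])  # False
for name, contribution in result["contributions"]:
    print(f"{name}: {contribution:+.2f}")
```

In this made-up case the output lists late_payments (-2.00) first, outweighing income (+1.50), which is the kind of concrete reason an affected applicant could be given.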

d. Impact on Employment

The automation enabled by AI and ML has the potential to displace millions of jobs across various industries, from manufacturing and retail to customer service and transportation. While some argue that AI will create new jobs and industries, the rapid pace of technological change poses a threat to workers whose skills are not easily transferable.

- Example: The rise of autonomous vehicles threatens to disrupt jobs in trucking, delivery services, and taxi industries.

Key Point: Policymakers must consider the social and economic impact of AI-driven job displacement and implement strategies to help workers transition to new roles, such as through reskilling programs and social safety nets.

3. Regulatory Landscape for AI and ML

The rapid development of AI and ML technologies has outpaced the creation of regulatory frameworks, leaving policymakers scrambling to catch up. While some countries have begun implementing regulations, the global nature of AI presents challenges in creating consistent and effective rules.

a. National Regulations

Countries around the world are starting to develop AI-specific regulations, though the scope and approach vary significantly. Some examples include:

- European Union: The EU’s Artificial Intelligence Act, proposed in 2021 and adopted in 2024, creates a comprehensive regulatory framework for AI. The Act categorizes AI systems by risk level, prohibits a small set of unacceptable practices, and imposes strict requirements on high-risk systems, such as certain uses of facial recognition and other biometric identification.

- United States: In contrast, the U.S. has taken a more fragmented approach, with states like California passing their own AI laws. Federal agencies like the Federal Trade Commission (FTC) and the National Institute of Standards and Technology (NIST) have issued guidelines, but no comprehensive federal regulation exists.

Key Point: While national regulations are important, international cooperation is essential to ensure that AI is governed in a way that promotes ethical standards and fosters innovation globally.

b. Ethical Guidelines and Frameworks

In addition to formal regulations, various organizations and bodies have created ethical guidelines for AI development. Notable initiatives include:

- OECD Principles on AI: The Organisation for Economic Co-operation and Development (OECD) has issued guidelines for the responsible development and use of AI, emphasizing fairness, transparency, accountability, and respect for human rights.

- IEEE Ethically Aligned Design: The Institute of Electrical and Electronics Engineers (IEEE) has published Ethically Aligned Design, a framework for ethical AI focused on ensuring that autonomous and intelligent systems prioritize human well-being and dignity.

Key Point: Ethical guidelines help shape the development of AI systems and provide developers with a framework to consider potential risks and consequences before deployment.

c. The Role of International Cooperation

Given the global nature of AI and its widespread impact, international collaboration is critical to establishing shared ethical standards and regulations. Efforts such as the G7’s Hiroshima AI Process guiding principles and UNESCO’s Recommendation on the Ethics of Artificial Intelligence aim to build global consensus on the ethical use of AI.

Key Point: Collaboration among nations and international organizations will be essential to addressing the complex ethical and regulatory challenges posed by AI and ensuring that these technologies benefit all of humanity.

4. Pathways Toward Ethical AI and ML Regulation

To address the ethical challenges and regulatory gaps in AI and ML, several actions can be taken:

a. Designing Fair and Inclusive Algorithms

One of the first steps in addressing the ethical concerns surrounding AI is ensuring that algorithms are designed to be fair and inclusive. This involves:

- Using diverse datasets to train AI systems, ensuring that the models reflect a wide range of perspectives and experiences.

- Conducting regular audits to detect and correct biases in algorithms.

- Involving ethicists and social scientists in the design and development of AI systems.
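Correcting bias can start before a model is trained. The sketch below implements reweighing, a pre-processing technique described by Kamiran and Calders: each training example gets the weight P(group) * P(label) / P(group, label), so that in the weighted data the label is statistically independent of group membership. It is one technique among many and is shown on hypothetical toy data; the resulting weights would then be passed to any learner that accepts per-sample weights.

```python
from collections import Counter


def reweighing_weights(groups, labels):
    """Per-example weights P(group) * P(label) / P(group, label).

    Computed from counts: (n_group * n_label) / (n * n_group_and_label).
    """
    n = len(labels)
    group_counts = Counter(groups)
    label_counts = Counter(labels)
    joint_counts = Counter(zip(groups, labels))
    return [
        group_counts[g] * label_counts[y] / (n * joint_counts[(g, y)])
        for g, y in zip(groups, labels)
    ]


def weighted_positive_rate(groups, labels, weights, group):
    """Weighted share of positive labels (label == 1) within one group."""
    total = sum(w for g, w in zip(groups, weights) if g == group)
    positive = sum(w for g, y, w in zip(groups, labels, weights) if g == group and y == 1)
    return positive / total


# Hypothetical historical data: group A was hired (label 1) far more often than B.
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
labels = [1, 1, 1, 0, 1, 0, 0, 0]
weights = reweighing_weights(groups, labels)
for group in ("A", "B"):
    print(group, round(weighted_positive_rate(groups, labels, weights, group), 3))
```

Unweighted, the positive rates are 0.75 for group A and 0.25 for group B; after reweighing, both groups have a weighted positive rate of 0.5, so a model trained on the weighted data no longer sees group membership as predictive of the label.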

b. Strengthening Privacy Protection

Robust privacy laws and data protection regulations are essential to safeguard individuals’ personal information. These regulations must ensure that data collection practices are transparent, individuals’ consent is obtained, and personal data is handled responsibly.
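To make the consent and data-handling requirements concrete, the sketch below filters records before they reach a model: it keeps only people who consented to the stated purpose and only the fields that purpose needs (data minimisation). It assumes consent is the lawful basis being relied on, which is only one of several available under laws such as the GDPR; the purposes, field names, and `Record` type are hypothetical.

```python
from dataclasses import dataclass, field

# Hypothetical mapping from each declared processing purpose to the only
# fields that purpose is allowed to use.
PURPOSE_FIELDS = {
    "credit_scoring": {"income", "debt_ratio"},
    "newsletter": {"email"},
}


@dataclass
class Record:
    data: dict
    consented_purposes: set = field(default_factory=set)


def collect_for_purpose(records, purpose):
    """Return minimised copies of the records whose owners consented to purpose."""
    allowed = PURPOSE_FIELDS[purpose]  # raises KeyError for undeclared purposes
    return [
        {key: value for key, value in record.data.items() if key in allowed}
        for record in records
        if purpose in record.consented_purposes
    ]


people = [
    Record({"email": "a@example.com", "income": 52000, "debt_ratio": 0.3}, {"credit_scoring"}),
    Record({"email": "b@example.com", "income": 61000, "debt_ratio": 0.1}, {"newsletter"}),
]
print(collect_for_purpose(people, "credit_scoring"))
# [{'income': 52000, 'debt_ratio': 0.3}]
```

Failing loudly on an undeclared purpose is a deliberate choice here: it forces every new use of the data to be declared before any fields are released.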

c. Promoting AI Transparency and Accountability

Developers should be required to make AI systems explainable and transparent. Governments should mandate that AI systems include audit trails and decision logs, which can be reviewed to ensure that decisions are made fairly and consistently.
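A decision log is most useful to reviewers if it cannot be silently edited. The sketch below is one possible design, not a mandated format: each entry records the model version, inputs, and decision, plus a SHA-256 hash that also covers the previous entry's hash, so altering any earlier entry breaks verification. The class and field names are hypothetical. In practice the latest hash would also need to be stored somewhere the system operator cannot rewrite, since anyone able to rewrite the whole log could recompute every hash.

```python
import hashlib
import json
import time

GENESIS_HASH = "0" * 64  # placeholder previous-hash value for the first entry


def _digest(body: dict) -> str:
    # Canonical JSON (sorted keys) so the same entry always hashes the same way.
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()


class DecisionLog:
    """Append-only, hash-chained log of automated decisions (illustrative only)."""

    def __init__(self):
        self.entries = []

    def append(self, model_version, inputs, decision, timestamp=None):
        body = {
            "timestamp": time.time() if timestamp is None else timestamp,
            "model_version": model_version,
            "inputs": inputs,
            "decision": decision,
            "prev_hash": self.entries[-1]["hash"] if self.entries else GENESIS_HASH,
        }
        entry = {**body, "hash": _digest(body)}
        self.entries.append(entry)
        return entry["hash"]

    def verify(self):
        """Recompute every hash and check each entry links to the one before it."""
        prev_hash = GENESIS_HASH
        for entry in self.entries:
            body = {key: value for key, value in entry.items() if key != "hash"}
            if body["prev_hash"] != prev_hash or entry["hash"] != _digest(body):
                return False
            prev_hash = entry["hash"]
        return True


log = DecisionLog()
log.append("credit-model-v1", {"income": 3.0, "late_payments": 2}, "deny")
log.append("credit-model-v1", {"income": 5.0, "late_payments": 0}, "approve")
print(log.verify())  # True
log.entries[0]["decision"] = "approve"  # simulate tampering with an old record
print(log.verify())  # False
```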

d. Investing in Workforce Reskilling

Governments should invest in programs that provide reskilling and retraining opportunities for workers whose jobs are at risk due to AI-driven automation. This will help ease the social and economic disruptions caused by job displacement.

5. Conclusion

As AI and ML technologies continue to evolve, the ethical and regulatory challenges they pose will only become more complex. To ensure that these technologies are used responsibly and ethically, a multi-stakeholder approach is necessary. Governments, tech companies, researchers, and civil society must work together to create regulations that protect individuals’ rights while fostering innovation.

The key to achieving a balanced approach lies in creating clear, fair, and transparent regulations that prioritize human dignity, privacy, and fairness. By focusing on responsible AI development, we can harness the potential of AI and ML to improve society, while ensuring that these technologies are used ethically and equitably.